Monday, 27 November 2023

How to check if a port is open?
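A minimal sketch in Python for checking a TCP port, assuming "open" means that something accepts connections on it: try a connection with a timeout. The function name and the example address are hypothetical, and UDP ports cannot be checked this way because UDP has no handshake.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the name and completes the TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, unreachable or DNS failure
        return False


if __name__ == "__main__":
    print(is_port_open("127.0.0.1", 22))
```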

Thursday, 16 November 2023

How to write a video using GStreamer with OpenCV?

import cv2

gstcmd = "https://test-videos.co.uk/vids/bigbuckbunny/mp4/h264/1080/Big_Buck_Bunny_1080_10s_1MB.mp4"
# cap = cv2.VideoCapture(gstcmd, cv2.CAP_GSTREAMER)
cap = cv2.VideoCapture(gstcmd)
count = 0
h = 1080
w = 1920
gst_out = "appsrc ! video/x-raw, format=BGR ! queue ! nvvideoconvert ! nvv4l2h264enc ! h264parse ! qtmux ! filesink location=/data/test.mp4"
# The frame size given to VideoWriter must match the frames written (1920x1080 here)
out = cv2.VideoWriter(gst_out, cv2.CAP_GSTREAMER, 0, float(60), (w, h))
while True:
    try:
        count += 1
        # Capture frame-by-frame
        ret, frame = cap.read()
        if not ret:
            break
        # (the two lines below also need: import numpy as np)
        # frame = np.fromstring(frame, dtype=np.uint8).reshape((int(h*3/2), w, 1))
        # frame = cv2.cvtColor(frame, cv2.COLOR_YUV2BGR_NV12)
        print(frame.shape)
        out.write(frame)
        # Display the resulting frame
        # cv2.imshow('frame', frame)
        # if cv2.waitKey(1) & 0xFF == ord('q'):
        #     break
    except Exception:
        try:
            cap = cv2.VideoCapture(gstcmd, cv2.CAP_GSTREAMER)
        except Exception:
            print("rtsp connection is down %d..." % (count))
# When everything is done, release the capture and the writer
# (releasing the writer lets qtmux finish the MP4 file)
cap.release()
out.release()
cv2.destroyAllWindows()
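The nvvideoconvert and nvv4l2h264enc elements come from NVIDIA's DeepStream/Jetson GStreamer plugins, so the writer above only opens on machines that have them. A hedged sketch of a helper that builds either that pipeline or a software fallback with videoconvert and x264enc (x264enc is in gst-plugins-ugly); the function name is hypothetical. It is worth checking out.isOpened() after creating the writer, because OpenCV returns an unopened writer instead of raising when the pipeline cannot be built.

```python
def build_writer_pipeline(path: str, use_nvidia: bool = True) -> str:
    """Return a GStreamer pipeline string for cv2.VideoWriter with cv2.CAP_GSTREAMER."""
    if use_nvidia:
        # Hardware encoder, same elements as the script above (NVIDIA DeepStream/Jetson plugins)
        encode = "nvvideoconvert ! nvv4l2h264enc"
    else:
        # Software encoder: videoconvert turns BGR into a format x264enc accepts
        encode = "videoconvert ! x264enc"
    return ("appsrc ! video/x-raw, format=BGR ! queue ! "
            f"{encode} ! h264parse ! qtmux ! filesink location={path}")


if __name__ == "__main__":
    import cv2

    pipeline = build_writer_pipeline("/data/test.mp4", use_nvidia=False)
    out = cv2.VideoWriter(pipeline, cv2.CAP_GSTREAMER, 0, 30.0, (1920, 1080))
    # OpenCV does not raise on a bad pipeline, so check explicitly
    if not out.isOpened():
        raise RuntimeError("GStreamer writer failed to open: " + pipeline)
    # ... write 1920x1080 BGR frames with out.write(frame) here ...
    out.release()
```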

Wednesday, 18 October 2023

How to quickly view an image from the terminal in Ubuntu?

Open a terminal, install feh, then open the image:

sudo apt install feh

feh image.jpg

Wednesday, 6 September 2023

How to allow a standard OS user to add or remove a Wi-Fi network without sudo?

Tuesday, 15 August 2023

How to check or modify the network card speed in Ubuntu?

ref: https://phoenixnap.com/kb/ethtool-command-change-speed-duplex-ethernet-card-linux

sudo apt install ethtool

sudo ethtool enp4s0

sudo ethtool -s enp4s0 autoneg on speed 1000 duplex full

sudo ethtool enp4s0

Settings for enp4s0:
        Supported ports: [ TP ]
        Supported link modes:   10baseT/Half 10baseT/Full
                                100baseT/Half 100baseT/Full
                                1000baseT/Full
        Supported pause frame use: Symmetric Receive-only
        Supports auto-negotiation: Yes
        Supported FEC modes: Not reported
        Advertised link modes:  10baseT/Half 10baseT/Full
                                100baseT/Half 100baseT/Full
                                1000baseT/Full
        Advertised pause frame use: No
        Advertised auto-negotiation: Yes
        Advertised FEC modes: Not reported
        Link partner advertised link modes:  10baseT/Half 10baseT/Full
                                             100baseT/Half 100baseT/Full
                                             1000baseT/Full
        Link partner advertised pause frame use: No
        Link partner advertised auto-negotiation: Yes
        Link partner advertised FEC modes: Not reported
        Speed: 10Mb/s
        Duplex: Full
        Port: Twisted Pair
        PHYAD: 0
        Transceiver: internal
        Auto-negotiation: on
        MDI-X: Unknown
        Supports Wake-on: pumbg
        Wake-on: g
        Current message level: 0x00000033 (51)
                               drv probe ifdown ifup
        Link detected: yes
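When checking several machines, it can help to pull only the negotiated speed, duplex and link state out of that output. A small Python sketch, assuming the "Field: value" layout shown above; the function name is hypothetical. On Linux the same speed (in Mb/s) is also exposed in /sys/class/net/<iface>/speed.

```python
import re
import subprocess
from typing import Dict, Optional


def parse_ethtool(output: str) -> Dict[str, Optional[str]]:
    """Extract a few key fields from `ethtool <iface>` text output."""
    fields: Dict[str, Optional[str]] = {
        "Speed": None, "Duplex": None, "Auto-negotiation": None, "Link detected": None,
    }
    for line in output.splitlines():
        # Field lines look like "\tSpeed: 10Mb/s"; the name is everything before the first colon
        m = re.match(r"\s*([^:]+):\s*(.*)$", line)
        if m and m.group(1) in fields:
            fields[m.group(1)] = m.group(2).strip()
    return fields


if __name__ == "__main__":
    text = subprocess.run(["ethtool", "enp4s0"], capture_output=True, text=True).stdout
    print(parse_ethtool(text))
```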

Wednesday, 2 August 2023

How to count the pingable IP addresses using a bash script?

#!/bin/bash

# Array of IP addresses to ping

ip_addresses=(

192.168.1.1

192.168.1.2

192.168.1.3

)

# Variables to count successful and unsuccessful pings

ping_success=0

ping_fail=0

# Function to perform the ping and update the counters

ping_ips() {

for ip in "${ip_addresses[@]}"; do

# -W 1: wait at most 1 second for each reply
if ping -c 1 -W 1 "$ip" > /dev/null 2>&1; then

echo "Ping to $ip succeeded"

((ping_success++))

else

echo "Ping to $ip failed"

((ping_fail++))

fi

done

}

# Call the function

ping_ips

# Print the results

echo "Total IPs: ${#ip_addresses[@]}"

echo "Ping Success: $ping_success"

echo "Ping Fail: $ping_fail"
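The bash loop pings one address at a time, so a long list of unreachable hosts gets slow. A Python sketch of the same tally that pings in parallel with a thread pool; it assumes Linux iputils ping (-c count, -W timeout in seconds), and the function names are hypothetical. The probe is a parameter so the counting logic can be tried without network access.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, Tuple


def ping_once(ip: str) -> bool:
    """Send one ICMP echo with the system ping (Linux iputils flags assumed)."""
    result = subprocess.run(["ping", "-c", "1", "-W", "1", ip],
                            stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
    return result.returncode == 0


def count_reachable(ips: Iterable[str], probe: Callable[[str], bool] = ping_once,
                    workers: int = 32) -> Tuple[int, int]:
    """Probe all IPs in parallel and return (success, fail) counts."""
    ips = list(ips)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(probe, ips))  # results keep the input order
    for ip, ok in zip(ips, results):
        print(f"Ping to {ip} {'succeeded' if ok else 'failed'}")
    success = sum(results)
    return success, len(ips) - success


if __name__ == "__main__":
    ip_addresses = ["192.168.1.1", "192.168.1.2", "192.168.1.3"]
    ok, bad = count_reachable(ip_addresses)
    print(f"Total IPs: {len(ip_addresses)}\nPing Success: {ok}\nPing Fail: {bad}")
```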

Thursday, 22 June 2023

How to change the Docker default gateway and IP?

ref: chatgpt

To change the gateway IP address for an NGINX Docker container, you can make use of Docker's network functionality. Follow these steps:

- Find the name or ID of the Docker network associated with your NGINX container. You can use the docker network ls command to list the available networks and identify the one that your container is connected to.

- Use the docker network inspect command to retrieve detailed information about the network. Replace <network-name> with the name or ID of the network from the previous step. For example:

docker network inspect <network-name>

- Look for the "Containers" section in the output of the docker network inspect command. Find the entry that corresponds to your NGINX container and note down its container ID.

- Stop the NGINX container using the docker stop command. Replace <container-id> with the container ID obtained in the previous step:

docker stop <container-id>

- Disconnect the NGINX container from the network using the docker network disconnect command. Replace <network-name> and <container-id> with the appropriate values:

docker network disconnect <network-name> <container-id>

- Create a new network with the desired gateway IP address using the docker network create command. The --subnet value must be a network address (for example 172.28.0.0/16) and the --gateway value an address inside it (for example 172.28.0.1). Replace <new-subnet>, <new-gateway-ip> and <new-network-name> with your desired values:

docker network create --subnet=<new-subnet>/16 --gateway=<new-gateway-ip> <new-network-name>

- Reconnect the NGINX container to the new network using the docker network connect command. Replace <new-network-name> and <container-id> with the appropriate values:

docker network connect <new-network-name> <container-id>

- Start the NGINX container again:

docker start <container-id>

After following these steps, your NGINX container should be connected to the new network with the specified gateway IP address. Please note that this approach assumes you have administrative privileges and sufficient Docker knowledge to manage networks and containers.
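A common mistake in the create step is passing the gateway itself as the subnet. A Python sketch that builds the docker network create command and uses the standard ipaddress module to check that the subnet is a proper network address and the gateway lies inside it; the function name and the example addresses are hypothetical.

```python
import ipaddress
from typing import List, Optional


def docker_network_create_cmd(name: str, subnet: str, gateway: Optional[str] = None) -> List[str]:
    """Build a `docker network create` command, checking the gateway lies in the subnet."""
    # strict=True rejects values with host bits set, such as "172.28.0.1/16"
    net = ipaddress.ip_network(subnet, strict=True)
    if gateway is None:
        gw = next(net.hosts())  # first usable address, e.g. 172.28.0.1
    else:
        gw = ipaddress.ip_address(gateway)
        if gw not in net:
            raise ValueError(f"gateway {gw} is not inside subnet {net}")
    return ["docker", "network", "create", f"--subnet={net}", f"--gateway={gw}", name]


if __name__ == "__main__":
    print(" ".join(docker_network_create_cmd("nginx-net", "172.28.0.0/16")))
```

The returned list can be passed to subprocess.run(cmd, check=True). If you also want a fixed container address, docker network connect accepts --ip (for example --ip 172.28.0.10), which works on user-defined networks created with an explicit --subnet.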

Monday, 19 June 2023

How to create a Kafka service using Docker and Python?

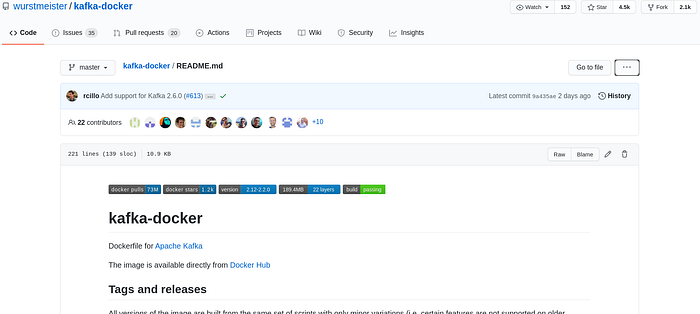

ref: https://towardsdatascience.com/kafka-docker-python-408baf0e1088

Apache Kafka: Docker Container and examples in Python

How to install Kafka using Docker and produce/consume messages in Python

Apache Kafka is a stream-processing software platform originally developed by LinkedIn, open sourced in early 2011 and currently developed by the Apache Software Foundation. It is written in Scala and Java.

Key Concepts of Kafka

Kafka is a distributed system that consists of servers and clients.

- Some servers are called brokers and they form the storage layer. Other servers run Kafka Connect to import and export data as event streams to integrate Kafka with your existing system continuously.

- On the other hand, clients allow you to create applications that read, write and process streams of events. A client could be a producer or a consumer. A producer writes (produces) events to Kafka, while a consumer reads and processes (consumes) events from Kafka.

Servers and clients communicate via a high-performance TCP network protocol and are fully decoupled and agnostic of each other.

But what is an event? In Kafka, an event is an object that has a key, a value and a timestamp. Optionally, it could have other metadata headers. You can think of an event as a record or a message.

One or more events are organized in topics: producers can write messages/events to different topics and consumers can choose to read and process events of one or more topics. In Kafka, you can configure how long events of a topic should be retained; therefore, they can be read whenever needed and are not deleted after consumption.

A consumer consumes the stream of events of a topic at its own pace and can commit its position (called offset). When we commit the offset, we record how far the consumer has read, so that it can resume from the record after the last one it consumed.

On the server side, topics are partitioned and replicated.

- A topic is partitioned for scalability reasons. Its events are spread over different Kafka brokers. This allows clients to read/write from/to many brokers at the same time.

- For availability and fault-tolerance every topic can also be replicated. It means that multiple brokers in different datacenters could have a copy of the same data.
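To make partitions, offsets and retention concrete, here is a toy in-memory model in Python. It is only an illustration under simplifying assumptions (single process, no replication, and zlib.crc32 standing in for the murmur2 hash that Kafka's default partitioner actually uses); the class and method names are hypothetical and are not part of any Kafka client.

```python
import zlib
from collections import defaultdict
from typing import Dict, List, Tuple

Record = Tuple[str, str]  # (key, value)


class ToyTopic:
    """Toy, single-process model of a partitioned topic with consumer-group offsets."""

    def __init__(self, partitions: int = 3):
        self.logs: List[List[Record]] = [[] for _ in range(partitions)]
        # committed[group][partition] = offset of the next record that group will read
        self.committed: Dict[str, Dict[int, int]] = defaultdict(dict)

    def send(self, key: str, value: str) -> Tuple[int, int]:
        # Same key -> same partition, so records with one key stay in order
        partition = zlib.crc32(key.encode()) % len(self.logs)
        self.logs[partition].append((key, value))
        return partition, len(self.logs[partition]) - 1  # (partition, offset)

    def poll(self, group: str, partition: int, reset: str = "earliest") -> List[Record]:
        log = self.logs[partition]
        start = self.committed[group].get(partition)
        if start is None:
            # No committed offset for this group yet: begin at the oldest or the newest record
            start = 0 if reset == "earliest" else len(log)
        # Reading does not delete anything; records stay until retention removes them
        records = log[start:]
        self.committed[group][partition] = len(log)  # commit the new position
        return records


if __name__ == "__main__":
    topic = ToyTopic()
    p, _ = topic.send("sensor-1", "a")
    topic.send("sensor-1", "b")
    print(topic.poll("group-A", p))  # [('sensor-1', 'a'), ('sensor-1', 'b')]
    print(topic.poll("group-A", p))  # []
    print(topic.poll("group-B", p))  # [('sensor-1', 'a'), ('sensor-1', 'b')]
```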

For a detailed explanation of how Kafka works, check its official website.

Enough introduction! Let’s see how to install Kafka in order to test our sample Python scripts!

Install Kafka using Docker

As data scientists, we usually find Kafka already installed, configured and ready to be used. For the sake of completeness, in this tutorial, let’s see how to install an instance of Kafka for testing purposes. To do so, we are going to use Docker Compose and Git. Please install them on your system if they are not already installed.

In your working directory, open a terminal and clone the GitHub repository of the Docker image for Apache Kafka. Then change the current directory to the repository folder.

git clone https://github.com/wurstmeister/kafka-docker.git

cd kafka-docker/

Inside kafka-docker, create a text file named docker-compose-expose.yml with the following content (you can use your favourite text editor):

version: '2'
services:
  zookeeper:
    image: wurstmeister/zookeeper:3.4.6
    ports:
      - "2181:2181"
  kafka:
    image: wurstmeister/kafka
    ports:
      - "9092:9092"
    expose:
      - "9093"
    environment:
      KAFKA_ADVERTISED_LISTENERS: INSIDE://kafka:9093,OUTSIDE://localhost:9092
      KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: INSIDE:PLAINTEXT,OUTSIDE:PLAINTEXT
      KAFKA_LISTENERS: INSIDE://0.0.0.0:9093,OUTSIDE://0.0.0.0:9092
      KAFKA_INTER_BROKER_LISTENER_NAME: INSIDE
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
      KAFKA_CREATE_TOPICS: "topic_test:1:1"
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock

Now, you are ready to start the Kafka cluster with:

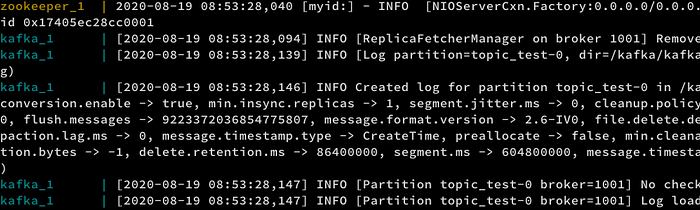

docker-compose -f docker-compose-expose.yml up

If everything is OK, you should see logs from zookeeper and kafka.

In case you want to stop it, just run

docker-compose -f docker-compose-expose.yml stop

in a separate terminal session inside the kafka-docker folder.

For a complete guide on Kafka Docker’s connectivity, check its wiki.

Producer and Consumer in Python

In order to create our first producer/consumer for Kafka in Python, we need to install the Python client.

pip install kafka-python

Then, create a Python file called producer.py with the code below.

from time import sleep
from json import dumps
from kafka import KafkaProducer

producer = KafkaProducer(
    bootstrap_servers=['localhost:9092'],
    value_serializer=lambda x: dumps(x).encode('utf-8')
)

for j in range(9999):
    print("Iteration", j)
    data = {'counter': j}
    producer.send('topic_test', value=data)
    sleep(0.5)

In the code block above:

- we have created a KafkaProducer object that connects to our local instance of Kafka;

- we have defined a way to serialize the data we want to send by transforming it into a JSON string and then encoding it to UTF-8;

- we send an event every 0.5 seconds to the topic named “topic_test”, with the counter of the iteration as data. Instead of the counter, you can send anything.
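Kafka itself only stores bytes, so the producer's value_serializer and the consumer's value_deserializer must mirror each other. The two lambdas can be checked in isolation, without a broker; this sketch simply gives them (illustrative) function names.

```python
from json import dumps, loads


def serialize(x) -> bytes:
    # Same as value_serializer in producer.py: object -> JSON text -> UTF-8 bytes
    return dumps(x).encode("utf-8")


def deserialize(b: bytes):
    # Same as value_deserializer in consumer.py: UTF-8 bytes -> JSON text -> object
    return loads(b.decode("utf-8"))


if __name__ == "__main__":
    payload = serialize({"counter": 42})
    print(payload)               # b'{"counter": 42}'
    print(deserialize(payload))  # {'counter': 42}
```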

Now we are ready to start the producer:

python producer.py

The script should print the iteration number every half second.

[...]
Iteration 2219
Iteration 2220
Iteration 2221
Iteration 2222
[...]

Let’s leave the producer terminal session running and define our consumer in a separate Python file named consumer.py with the following lines of code.

from kafka import KafkaConsumer
from json import loads
from time import sleep

consumer = KafkaConsumer(
    'topic_test',
    bootstrap_servers=['localhost:9092'],
    auto_offset_reset='earliest',
    enable_auto_commit=True,
    group_id='my-group-id',
    value_deserializer=lambda x: loads(x.decode('utf-8'))
)

for event in consumer:
    event_data = event.value
    # Do whatever you want
    print(event_data)
    sleep(2)

In the script above, we define a KafkaConsumer that contacts the server “localhost:9092” and is subscribed to the topic “topic_test”. Since the producer script serializes each message to JSON and encodes it, here we decode it with a lambda function in value_deserializer. In addition,

- auto_offset_reset is a parameter that sets the policy for resetting offsets on OffsetOutOfRange errors (and, in practice, when the consumer group has no committed offset yet); if we set “earliest”, it will move to the oldest available message; if “latest” is set, it will move to the most recent;

- enable_auto_commit is a boolean parameter that states whether the offset will be periodically committed in the background;

- group_id is the name of the consumer group to join.

In the loop, we print the content of each consumed event every 2 seconds. Instead of printing, we can perform any task, such as writing it to a database or performing some real-time analysis.

At this point, if we run

python consumer.py

we should receive as output something like:

{'counter': 0}
{'counter': 1}
{'counter': 2}
{'counter': 3}
{'counter': 4}
{'counter': 5}
{'counter': 6}
[...]

The complete documentation of the parameters of the Python producer/consumer classes can be found in the kafka-python documentation listed in the References below.

Now you are ready to use Kafka in Python!

References

- https://kafka.apache.org/

- https://github.com/wurstmeister/kafka-docker

- https://kafka-python.readthedocs.io/en/master/index.html

- https://medium.com/big-data-engineering/hello-kafka-world-the-complete-guide-to-kafka-with-docker-and-python-f788e2588cfc

- https://towardsdatascience.com/kafka-python-explained-in-10-lines-of-code-800e3e07dad1

Thursday, 15 June 2023

How to observe the RTSP stream using tshark?

Ref: https://docs.nvidia.com/metropolis/deepstream/dev-guide/text/DS_NTP_Timestamp.html

Here is an example method to check whether an RTSP source sends RTCP Sender Reports, using a tool like tshark:

Note: we assume the RTSP source has IP address 192.168.1.100.

Install tshark on a host:

sudo apt-get install tshark

Find the host network interface that would be receiving the RTP/RTCP packets:

$ sudo tshark -D
...
eno1
...

Start the monitoring using the tshark tool. Replace the network interface and source IP as applicable:

$ sudo tshark -i eno1 -f "src host 192.168.1.100" -Y "rtcp"

On the same host, start streaming from the RTSP source only after starting the monitoring tool. Any client may be used:

$ gst-launch-1.0 rtspsrc location=<RTSP URL, e.g. rtsp://192.168.1.100/stream1> ! fakesink

The output of the tshark monitoring tool should have lines containing "Sender Report Source description". Here is a sample output:

6041 10.500649319 192.168.1.100 → 192.168.1.101 RTCP 94 Sender Report Source description

How to compress a PDF in Ubuntu using gs (Ghostscript)?

Create a file named main.sh:-

#!/bin/sh
# Compress the PDF given as the first argument into out.pdf
gs -dNOPAUSE -dBATCH -dQUIET \
  -sDEVICE=pdfwrite \
  -dCompatibilityLevel=1.3 \
  -dPDFSETTINGS=/screen \
  -dEmbedAllFonts=true \
  -dSubsetFonts=true \
  -dColorImageDownsampleType=/Bicubic \
  -dColorImageResolution=72 \
  -dGrayImageDownsampleType=/Bicubic \
  -dGrayImageResolution=72 \
  -dMonoImageDownsampleType=/Bicubic \
  -dMonoImageResolution=72 \
  -sOutputFile=out.pdf \
  "$1"

Then, run the script as follows:-

bash main.sh input.pdf
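To call Ghostscript from Python instead (for example, to batch-process a folder or try several resolutions), a sketch that builds the same argument list as main.sh and reports the size change. The function name and the dpi parameter are illustrative assumptions; passing a list to subprocess.run also avoids shell-quoting problems with spaces in file names.

```python
import os
import subprocess
import sys
from typing import List


def gs_compress_cmd(src: str, dst: str, dpi: int = 72) -> List[str]:
    """Build the same Ghostscript call as main.sh, with a configurable image resolution."""
    cmd = ["gs", "-dNOPAUSE", "-dBATCH", "-dQUIET", "-sDEVICE=pdfwrite",
           "-dCompatibilityLevel=1.3", "-dPDFSETTINGS=/screen",
           "-dEmbedAllFonts=true", "-dSubsetFonts=true"]
    for kind in ("Color", "Gray", "Mono"):
        cmd += [f"-d{kind}ImageDownsampleType=/Bicubic", f"-d{kind}ImageResolution={dpi}"]
    return cmd + [f"-sOutputFile={dst}", src]


if __name__ == "__main__":
    src = sys.argv[1]
    subprocess.run(gs_compress_cmd(src, "out.pdf"), check=True)
    before, after = os.path.getsize(src), os.path.getsize("out.pdf")
    print(f"{before} -> {after} bytes ({after / before:.0%} of the original)")
```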

Thursday, 25 May 2023

How to attach to a Docker container in Visual Studio Code and debug with CMake?

1. Install the "C++", "Dev Containers", "CMake" and "CMake Tools" extensions from the Marketplace.

2. After that, press Ctrl + Shift + P, search for "attach" and choose "Dev Containers: Attach to Running Container...".

3. Then, in the project root, create a folder named ".vscode" containing a file named "settings.json" as below:-

{
  "cmake.debugConfig": {
    "args": [
      "arg1",
      "arg2",
      "--flag",
      "value"
    ]
  }
}

4. Finally, press Ctrl + Shift + P -> CMake: Debug

Wednesday, 24 May 2023

error: (-217:Gpu API call) no kernel image is available for execution on the device in function 'call'

Error:

terminate called after throwing an instance of 'cv::Exception'

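This usually means OpenCV's CUDA code was not compiled for the compute capability of your GPU. Rebuild OpenCV with CUDA_ARCH_BIN set to the value for your GPU:

- 7.5 is for RTX 2080 Ti

- 8.6 is for RTX 3090

- 8.9 is for RTX 4090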
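When one OpenCV build must run on several of these cards, CUDA_ARCH_BIN takes a semicolon-separated list. A small Python sketch that turns the table above into that value; the dictionary and function names are hypothetical, and on a machine with PyTorch installed, torch.cuda.get_device_capability() returns the same number as a tuple, e.g. (8, 6) for an RTX 3090.

```python
from typing import Iterable

# Compute capabilities listed in the notes above; extend the table for other GPUs
COMPUTE_CAPABILITY = {
    "RTX 2080 Ti": "7.5",
    "RTX 3090": "8.6",
    "RTX 4090": "8.9",
}


def cuda_arch_bin(gpus: Iterable[str]) -> str:
    """Build a CUDA_ARCH_BIN value that covers every listed GPU, e.g. '7.5;8.6'."""
    archs = {COMPUTE_CAPABILITY[name] for name in gpus}
    return ";".join(sorted(archs, key=float))


if __name__ == "__main__":
    # Quote the value in the shell, because ';' would otherwise end the command
    print(f'cmake -D WITH_CUDA=ON -D CUDA_ARCH_BIN="{cuda_arch_bin(["RTX 3090", "RTX 2080 Ti"])}" ..')
```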

Thursday, 18 May 2023

How to use cnpy to pass values between C++ and Python?

CPP Version

#include <iostream>

#include "cnpy.h"

#include "opencv2/core.hpp"

#include "opencv2/imgproc.hpp"

#include "opencv2/highgui.hpp"

using namespace cv;

int main()

{

Mat img(100, 100, CV_32FC3);

randu(img, Scalar(0, 0, 0), Scalar(255, 255, 255));

std::cout << img << std::endl;

img = img/255.0;

std::cout << img << std::endl;

// Mat stores pixels row by row with interleaved channels, so the shape is {rows, cols, channels}

float* tmp = (float*)img.data;

cnpy::npy_save("img.npy", tmp, {100,100,3},"w");

// cv::imwrite("img1.jpg", img);

}

Python Version

import numpy as np

import cv2

temp = np.load("img.npy")

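print(temp.shape, temp.dtype)  # (100, 100, 3) float32

print(temp)

# values are in [0, 1], so scale back to 0-255 before saving as an image
# cv2.imwrite("img2.jpg", (temp * 255).astype(np.uint8))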
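To confirm that the array written by cnpy::npy_save has the expected shape and dtype, you can read the small text header every .npy file starts with. A stdlib-only Python sketch following the NumPy .npy format description (magic string, version bytes, header length, then a Python dict literal); the function name is hypothetical. For the C++ example above on a little-endian machine such as x86, the expected result is ('<f4', False, (100, 100, 3)), since CV_32FC3 is 32-bit float with three interleaved channels.

```python
import ast
import struct
from typing import Tuple


def read_npy_header(path: str) -> Tuple[str, bool, tuple]:
    """Return (dtype descr, fortran_order, shape) from a .npy file, without NumPy."""
    with open(path, "rb") as f:
        if f.read(6) != b"\x93NUMPY":
            raise ValueError(f"{path} is not a .npy file")
        major, _minor = f.read(2)
        # Format version 1.x stores the header length in 2 bytes; 2.x and 3.x use 4 bytes
        if major == 1:
            (header_len,) = struct.unpack("<H", f.read(2))
        else:
            (header_len,) = struct.unpack("<I", f.read(4))
        # The header is a dict literal, e.g.
        # {'descr': '<f4', 'fortran_order': False, 'shape': (100, 100, 3), }
        header = ast.literal_eval(f.read(header_len).decode("latin1"))
    return header["descr"], header["fortran_order"], header["shape"]


if __name__ == "__main__":
    print(read_npy_header("img.npy"))
```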

How to rsync from one machine to another machine?

sshpass -p "PASSWORD" rsync -avzh LOCAL_PATH -e 'ssh -p PORT' USERNAME@IP_ADDRESS:REMOTE_PATH

Wednesday, 17 May 2023

How to use telnet or nc between two Ubuntu PCs to verify the firewall?

One way is to use telnet on an Ubuntu PC:

telnet <IP address> <Port>

telnet 44.55.66.77 9090

Another way is to use nc to listen on a port:

On the host PC: nc -l 9090

On the remote PC: nc 44.55.66.77 9090

Then, type anything on the remote PC; you should see it on the host PC.

If you want to check it from Windows, open PowerShell:-

Test-NetConnection -ComputerName host -Port port

Test-NetConnection -ComputerName 192.168.0.1 -Port 80
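On a machine that has Python but neither telnet nor nc, the same listen-and-send check can be done with the socket module. A minimal sketch; the script name ncpy.py and the function names are hypothetical, and port 9090 and 44.55.66.77 are the example values from above. If the sender times out, a firewall is likely dropping the packets; "connection refused" usually means the packet got through but nothing is listening (or a firewall REJECT rule answered).

```python
import socket
import sys


def open_listener(port: int, host: str = "0.0.0.0") -> socket.socket:
    """Like `nc -l <port>`: bind and listen (port 0 lets the OS pick a free port)."""
    srv = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    srv.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    srv.bind((host, port))
    srv.listen(1)
    return srv


def receive_once(srv: socket.socket, bufsize: int = 1024) -> str:
    """Accept one connection and return the first chunk of text it sends."""
    conn, addr = srv.accept()
    with conn:
        print(f"connection from {addr[0]}")
        return conn.recv(bufsize).decode()


def send_text(host: str, port: int, text: str, timeout: float = 3.0) -> None:
    """Like `nc <host> <port>` followed by typing `text`."""
    with socket.create_connection((host, port), timeout=timeout) as s:
        s.sendall(text.encode())


if __name__ == "__main__":
    if sys.argv[1] == "listen":
        # Host PC:   python3 ncpy.py listen 9090
        with open_listener(int(sys.argv[2])) as srv:
            print(receive_once(srv))
    else:
        # Remote PC: python3 ncpy.py 44.55.66.77 9090
        send_text(sys.argv[1], int(sys.argv[2]), "hello from the remote PC\n")
```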

Tuesday, 9 May 2023

How to share internet between Ubuntu PCs using a USB Wi-Fi dongle?

pcA: Ubuntu with the USB dongle, has internet

pcB: Ubuntu without a USB dongle, no internet

step1: check the IP address that pcA gets when its IPv4 Method is changed to "Shared to other computers". Before this change, pcA has a default IP address, which we call pcAip1.

Note: do not change any setting on "USB Ethernet", because that is the internet connection.

Change the setting only on "PCI Ethernet".

Open the settings of "PCI Ethernet", select IPv4, and select "Shared to other computers" under IPv4 Method.

Once it is done, click Apply.

Then, open a terminal and type "nmcli dev show eth0", where eth0 is the network interface name of "PCI Ethernet" (sometimes it is named "enp8s0", "enp3s0", etc.).

You should get the IP address of pcA in the "Shared to other computers" mode, which we call pcAip2:-

IP4.ADDRESS[1]: 10.42.0.1/24

You will see a different IP address, pcAip1, if you change "Shared to other computers" back to Automatic or Manual. Write down pcAip2.

Change it back to the manual IP address so that you can connect to pcB using its original IP.

step2: SSH into pcB using its default IP address (pcBip1), run "sudo nmtui", and edit the wired connection.

Change the settings from before to after as follows:-

before, we have pcBip1: 10.201.56.19

after, we have pcBip2: 10.42.0.2

Here, define a temporary IP address for pcB, 10.42.0.2/24. For Gateway, enter pcAip2, which is 10.42.0.1 (without the /24), and use the same address for DNS servers.

Once it is done, reboot pcB.

step3: now on pcA, change the IPv4 method again from Manual to "Shared to other computers".

Open a terminal and try to SSH into pcB using the pcBip2 address. Once you log in, try to ping www.google.com. If you receive a reply from Google, congrats. If not, something is wrong with your settings; try again.

step4: when you have finished using the internet connection on pcB and want to switch it back to the original setting (from pcBip2 to pcBip1), reverse the settings from step2. Then, reboot pcB.

step5: back on pcA, restore the PCI Ethernet setting to pcAip1. You should be able to connect to pcBip1 as usual, but pcB will no longer have internet access.
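The step2 values are easy to get wrong (pcB in a different subnet, or a prefix typed into the Gateway field). A small Python check with the standard ipaddress module, using the example addresses from above; the function name is hypothetical.

```python
import ipaddress
from typing import List


def check_shared_setup(pc_a_shared: str, pc_b_static: str, gateway: str) -> List[str]:
    """Return problems with pcB's manual settings; an empty list means they look consistent."""
    a = ipaddress.ip_interface(pc_a_shared)  # pcAip2 with prefix, e.g. 10.42.0.1/24
    b = ipaddress.ip_interface(pc_b_static)  # pcBip2 with prefix, e.g. 10.42.0.2/24
    gw = ipaddress.ip_address(gateway)       # raises ValueError if a prefix like /24 is included
    problems = []
    if b.network != a.network:
        problems.append(f"pcB address {b} is not in pcA's shared network {a.network}")
    if gw != a.ip:
        problems.append(f"gateway {gw} should be pcA's shared address {a.ip}")
    if b.ip == a.ip:
        problems.append("pcB must not use the same address as pcA")
    return problems


if __name__ == "__main__":
    print(check_shared_setup("10.42.0.1/24", "10.42.0.2/24", "10.42.0.1"))  # []
```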

Monday, 1 May 2023

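How to check for a PyTorch installation error?

The script below runs a convolution forward and backward pass on the GPU with cuDNN enabled; if it completes without an error, the CUDA and cuDNN parts of the installation are working:-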

import torch

torch.backends.cuda.matmul.allow_tf32 = True

torch.backends.cudnn.benchmark = True

torch.backends.cudnn.deterministic = False

torch.backends.cudnn.allow_tf32 = True

data = torch.randn([1, 512, 120, 67], dtype=torch.float, device='cuda', requires_grad=True)

net = torch.nn.Conv2d(512, 512, kernel_size=[3, 3], padding=[1, 1], stride=[1, 1], dilation=[1, 1], groups=1)

net = net.cuda().float()

out = net(data)

out.backward(torch.randn_like(out))

torch.cuda.synchronize()

Tuesday, 28 March 2023

How to resolve the following C++ build and runtime errors?

CUDA error: no kernel image is available for execution on the device

> check the CUDA architecture version (CUDA_ARCH_BIN) used when building OpenCV: the 30 series should be 8.6, the 20 series should be 7.5

CMake Error: The following variables are used in this project, but they are set to NOTFOUND.

Please set them or make sure they are set and tested correctly in the CMake files:

CUDA_cublas_device_LIBRARY (ADVANCED)

linked by target "caffe" in directory /home/jakebmalis/Documents/openpose/3rdparty/caffe/src/caffe

> check the CMake version; upgrading to CMake 3.21 is suggested (search for how to install CMake)

Sunday, 26 March 2023

How to install and update libreoffice templates?

https://github.com/dohliam/libreoffice-impress-templates/wiki/Ubuntu-Debian-Install-Guide

Monday, 13 March 2023

How to back up journald logs daily?

#!/bin/bash

# Set the backup directory

BACKUP_DIR=/logs

# Create the backup directory if it doesn't exist

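mkdir -p "$BACKUP_DIR"

# Get the current date in the format "YYYY-MM-DD"

DATE=$(date +%Y-%m-%d)

# Yesterday at 00:00:01 in local time, which is how journalctl --since interprets it

PREV_DATE=$(date -d "1 day ago 00:00:01" +"%Y-%m-%d %H:%M:%S")

# Loop through all running containers and back up their logs
# (CONTAINER_NAME is only set when the containers use Docker's journald log driver)

for container in $(docker ps --format '{{.Names}}'); do

journalctl -b CONTAINER_NAME="$container" --since "$PREV_DATE" >> "$BACKUP_DIR/$container-$DATE.log"

done

To run it daily, add a cron entry such as 0 0 * * * /path/to/backup.sh (the script path is only an example).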

Friday, 10 February 2023

How to use Python with a Telegram bot?

step1: create a new bot with the Telegram BotFather:

/newbot

test_bot (the bot's display name)

test_bot (the bot's username, which must end in "bot")

(get the telegram bot token, <telegramBotToken>)

step2: check the new bot's chat ID by opening the following URL in a browser: https://api.telegram.org/bot<telegramBotToken>/getUpdates?offset=0

Send a message to the new bot in Telegram.

Refresh the browser to get the chat ID.

(get the telegram bot chat id, <telegramChatID>)

step3: add the bot to a new group and send a message in the group. Then, refresh the getUpdates URL from step2.

You will see the group chat ID; copy and paste it into your Python code.
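Instead of reading the chat IDs off the raw JSON in the browser, a small Python helper can list every chat that appears in the getUpdates response. A sketch, assuming the standard Bot API response layout (a "result" list of updates, each carrying a "chat" object inside "message", "my_chat_member" and similar fields); the function name is hypothetical. Group chat IDs are negative numbers, which makes them easy to tell apart from private chats.

```python
from typing import Dict


def extract_chat_ids(updates: dict) -> Dict[int, str]:
    """Map chat id -> "type: name" for every chat seen in a getUpdates response."""
    chats: Dict[int, str] = {}
    for update in updates.get("result", []):
        for payload in update.values():
            # "message", "channel_post", "my_chat_member", ... all carry a "chat" object
            if isinstance(payload, dict) and "chat" in payload:
                chat = payload["chat"]
                name = chat.get("title") or chat.get("username") or chat.get("first_name", "")
                chats[chat["id"]] = f'{chat.get("type", "?")}: {name}'
    return chats


if __name__ == "__main__":
    import requests

    TOKEN = "<telegramBotToken>"
    resp = requests.get(f"https://api.telegram.org/bot{TOKEN}/getUpdates", params={"offset": 0})
    for chat_id, label in extract_chat_ids(resp.json()).items():
        print(chat_id, label)
```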

step4: use the following Python code to verify the Telegram bot:

import requests

TOKEN = "<telegramBotToken>"

chat_id = "<telegramChatID>"

message = "hello from your telegram bot"

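url = f"https://api.telegram.org/bot{TOKEN}/sendMessage"

# Pass chat_id and text as params so requests URL-encodes them (spaces, &, # and so on)
print(requests.get(url, params={"chat_id": chat_id, "text": message}).json())  # this sends the message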

Sunday, 8 January 2023

How to save Docker images locally using Docker Compose and then install them on another server offline?

Download files and images

To install Milvus offline, you need to pull and save all images in an online environment first, and then transfer them to the target host and load them manually.

- Download an installation file.

- For Milvus standalone:

wget https://github.com/milvus-io/milvus/releases/download/v2.2.2/milvus-standalone-docker-compose.yml -O docker-compose.yml

- For Milvus cluster:

wget https://github.com/milvus-io/milvus/releases/download/v2.2.2/milvus-cluster-docker-compose.yml -O docker-compose.yml

- Download requirement and script files.

wget https://raw.githubusercontent.com/milvus-io/milvus/master/deployments/offline/requirements.txt

- Pull and save images.

pip3 install -r requirements.txt

python3 save_image.py --manifest docker-compose.yml

The images are stored in the /images folder.

- Load the images.

cd

Install Milvus offline

Having transferred the images to the target host, run the following command to install Milvus offline.

docker-compose -f docker-compose.yml up -d

Uninstall Milvus

To uninstall Milvus, run the following command.

docker-compose -f docker-compose.yml down

===

requirements.txt:-

docker==5.0.0

nested-lookup==0.2.22

PyYAML  # save_image.py imports yaml

===

save_image.py:-

import argparse
import docker
import gzip
import os
import yaml
from nested_lookup import nested_lookup

if __name__ == "__main__":
    parser = argparse.ArgumentParser(
        description="Save Docker images")
    parser.add_argument("--manifest",
                        required=True,
                        help="Path to the manifest yaml")
    parser.add_argument("--save_path",
                        type=str,
                        default='images',
                        help='Directory to save images to')
    arguments = parser.parse_args()

    with open(arguments.manifest, 'r') as file:
        template = file.read()

    # Collect every "image:" value from all YAML documents in the manifest
    images = []
    parts = template.split('---')
    for p in parts:
        y = yaml.safe_load(p)
        matches = nested_lookup("image", y)
        if len(matches):
            images += matches

    save_path = arguments.save_path
    if not os.path.isdir(save_path):
        os.mkdir(save_path)

    client = docker.from_env()
    for image_name in set(images):
        file_name = image_name.split(':')[0].replace("/", "-")
        try:
            image = client.images.get(image_name)
            if image.id:
                print("docker image \"" + image_name + "\" already exists.")
        except docker.errors.ImageNotFound:
            print("docker pull " + image_name + " ...")
            image = client.images.pull(image_name)
        # Stream the image tarball into a gzip-compressed file
        image_tar = image.save(named=True)
        with gzip.open(save_path + "/" + file_name + '.tar.gz', 'wb') as f:
            f.writelines(image_tar)

    print("Save docker images to \"" + save_path + "\"")
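The load command is not shown in full in these notes. As a hedged counterpart to save_image.py for the target host, the sketch below loads every archive in the images folder through the same docker Python SDK (client.images.load); the script and function names are hypothetical, and it reads each archive fully into memory, so very large images need enough RAM. The shell equivalent for a single archive is gunzip -c <file>.tar.gz | docker load.

```python
import argparse
import glob
import gzip
import os
from typing import List


def find_archives(load_path: str) -> List[str]:
    """List the .tar.gz archives written by save_image.py, in name order."""
    return sorted(glob.glob(os.path.join(load_path, "*.tar.gz")))


def load_images(load_path: str) -> None:
    import docker  # the same docker SDK package listed in requirements.txt

    client = docker.from_env()
    for archive in find_archives(load_path):
        print("loading " + archive + " ...")
        with gzip.open(archive, "rb") as f:
            # images.load() takes the tar data produced by image.save()
            for image in client.images.load(f.read()):
                print("loaded", image.tags)


if __name__ == "__main__":
    parser = argparse.ArgumentParser(description="Load Docker images")
    parser.add_argument("--load_path", type=str, default="images",
                        help="Directory containing the saved .tar.gz images")
    load_images(parser.parse_args().load_path)
```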

===

docker-compose.yml:-

version: '3.5'

services:
  etcd:
    container_name: milvus-etcd
    image: quay.io/coreos/etcd:v3.5.0
    environment:
      - ETCD_AUTO_COMPACTION_MODE=revision
      - ETCD_AUTO_COMPACTION_RETENTION=1000
      - ETCD_QUOTA_BACKEND_BYTES=4294967296
      - ETCD_SNAPSHOT_COUNT=50000
    volumes:
      - ${DOCKER_VOLUME_DIRECTORY:-.}/volumes/etcd:/etcd
    command: etcd -advertise-client-urls=http://127.0.0.1:2379 -listen-client-urls http://0.0.0.0:2379 --data-dir /etcd

  minio:
    container_name: milvus-minio
    image: minio/minio:RELEASE.2022-03-17T06-34-49Z
    environment:
      MINIO_ACCESS_KEY: minioadmin
      MINIO_SECRET_KEY: minioadmin
    ports:
      - "9001:9001"
    volumes:
      - ${DOCKER_VOLUME_DIRECTORY:-.}/volumes/minio:/minio_data
    command: minio server /minio_data --console-address ":9001"
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost:9000/minio/health/live"]
      interval: 30s
      timeout: 20s
      retries: 3

  standalone:
    container_name: milvus-standalone
    image: milvusdb/milvus:v2.2.2
    command: ["milvus", "run", "standalone"]
    environment:
      ETCD_ENDPOINTS: etcd:2379
      MINIO_ADDRESS: minio:9000
    volumes:
      - ${DOCKER_VOLUME_DIRECTORY:-.}/volumes/milvus:/var/lib/milvus
    ports:
      - "19530:19530"
      - "9091:9091"
    depends_on:
      - "etcd"
      - "minio"

networks:
  default:
    name: milvus