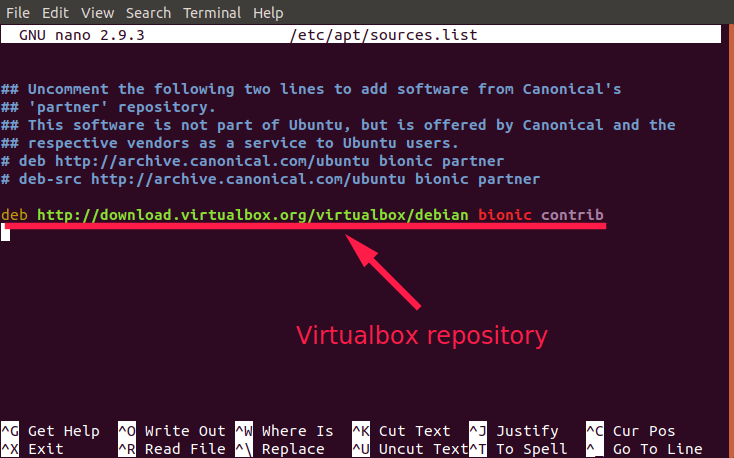

reference: https://c-nergy.be/blog/?p=13390

Hello World,

Based on the feedback we have received through this blog, it seems that some changes introduced in Ubuntu 18.04.2 break xRDP, and people can no longer establish a remote desktop connection. After the xRDP login page, you are presented with only a green background screen, and the connection eventually fails. This issue occurs whether you perform a manual installation or use the latest version of the Std-Xrdp-Install-0.5.1.sh script.

This post will explain what needs to be done in order to fix this issue. So, let’s go!

Overview

Ubuntu 18.04.2 has been released, and more and more people are noticing that after installing the xRDP package, they are not able to connect to the desktop interface through remote desktop connection software. Apparently, Ubuntu 18.04.2 has introduced some changes that prevent the xRDP package from working as expected. We have performed a manual installation to see what the problem could be.

Problem Description

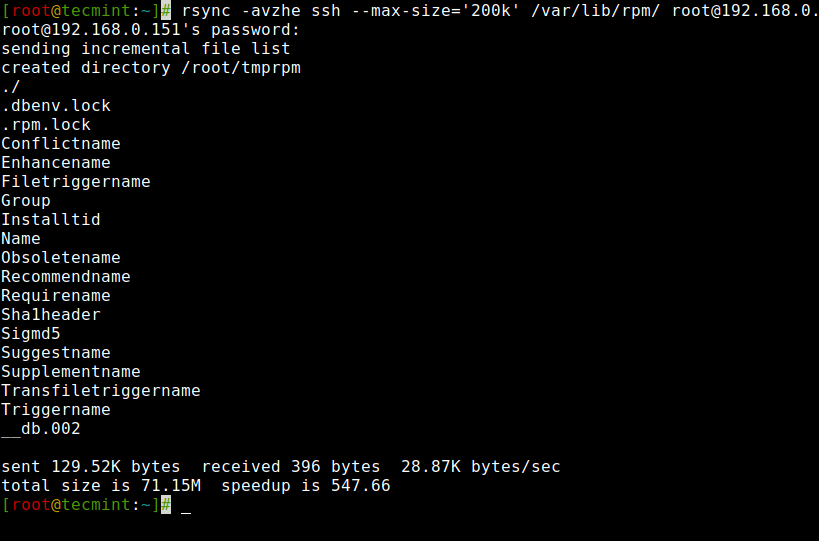

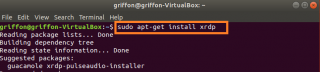

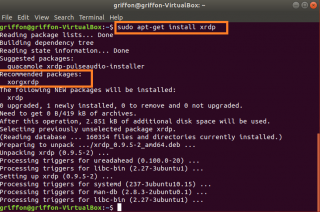

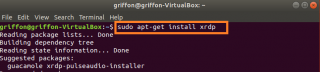

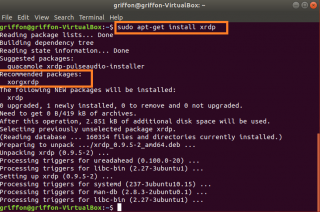

So, to perform a manual installation, we opened a terminal console and issued the following command:

sudo apt-get install xrdp

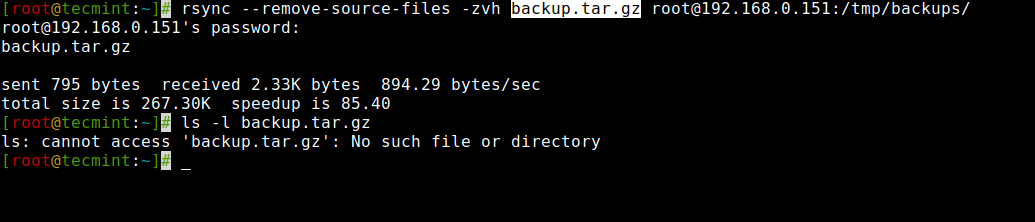

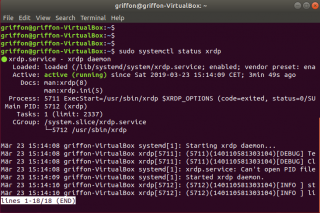

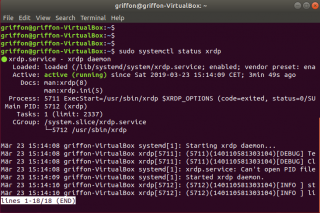

After performing the installation, we checked that the xrdp service was running using the following command:

sudo systemctl status xrdp
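If you want to script this check instead of reading the full status output, here is a minimal sketch. It assumes systemd's `systemctl is-active`, which prints a one-word state such as `active`, `inactive` or `failed`. The helper name `describe_xrdp_state` is ours and is not part of xrdp.

```shell
#!/bin/sh
# Translate the one-word state printed by `systemctl is-active` into a hint.
# describe_xrdp_state is a hypothetical helper, not part of xrdp.
describe_xrdp_state() {
    case "$1" in
        active)   echo "xrdp is running" ;;
        inactive) echo "xrdp is stopped: try 'sudo systemctl start xrdp'" ;;
        failed)   echo "xrdp failed: inspect 'sudo journalctl -u xrdp'" ;;
        *)        echo "xrdp state unknown: $1" ;;
    esac
}

# Only query systemd when systemctl is actually available.
if command -v systemctl >/dev/null 2>&1; then
    describe_xrdp_state "$(systemctl is-active xrdp 2>/dev/null)"
fi
```

Keep in mind that a running service only tells you that the login page will be reachable. As this post shows, the session can still fail after you log in.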

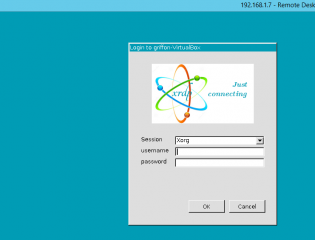

So far, everything seems to be working

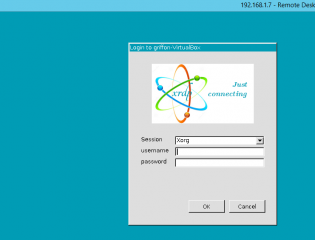

as expected. So, we moved to a windows computer, fired up the remote

desktop client and as we can see in the screenshot, we are presented

with the xrdp login page

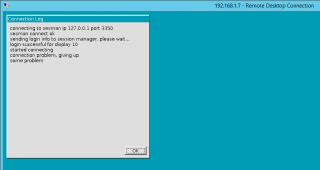

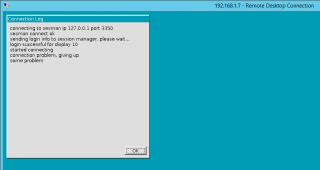

After entering our credentials, we only see a green background page and nothing happens. After several minutes, you should see the following error message:

connection problem,giving up

some problem

Resolution process

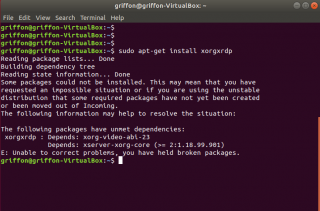

After looking into the logs, it seems that the xorgxrdp component of xRDP is not working as expected. When we installed the xRDP package, we noticed a message in the console mentioning that the xorgxrdp package is needed (see screenshot below). So, it seems that the xorgxrdp package is no longer installed along with xrdp.
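If you want to look at the logs yourself, here is a minimal sketch. It assumes the default xrdp log locations `/var/log/xrdp.log` and `/var/log/xrdp-sesman.log`, which may differ on your system, and it simply filters lines that mention an error or a failure. The helper name `scan_xrdp_log` is ours.

```shell
#!/bin/sh
# Print log lines that mention an error or failure, prefixed with the file name.
# scan_xrdp_log is a hypothetical helper; the paths below are assumed defaults.
scan_xrdp_log() {
    log_file="$1"
    if [ ! -r "$log_file" ]; then
        echo "cannot read $log_file" >&2
        return 1
    fi
    grep -iE 'error|fail' "$log_file" | sed "s|^|$log_file: |"
}

for f in /var/log/xrdp.log /var/log/xrdp-sesman.log; do
    scan_xrdp_log "$f" || true
done
```

Depending on the file permissions, you might need to run the script with sudo to be able to read these files.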

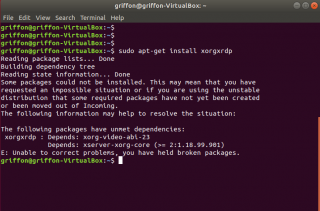

So, it seems that the issue is due to the fact that the xorgxrdp package is not installed. Moving forward, we decided to install the xorgxrdp package manually just after installing the xrdp package, so we issued the following command in a terminal console:

sudo apt-get install xorgxrdp

Issuing this command will not perform the installation, as there are some dependency errors.

We have just found the root cause.

The xorgxrdp package cannot be installed because of some missing dependencies. Because we have no xorgxrdp component installed on the computer, it seems logical that when we perform a remote connection, we are never presented with the Ubuntu desktop…
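To confirm this on your own machine, you can ask dpkg whether the two packages are really installed. The following is a minimal sketch that relies on `dpkg-query` and its `${Status}` field, which reads `install ok installed` for a fully installed package. The function names are ours.

```shell
#!/bin/sh
# Succeed when a dpkg status string reports a fully installed package.
status_is_installed() {
    [ "$1" = "install ok installed" ]
}

# Report whether a package is installed, using dpkg-query's ${Status} field.
report_package() {
    status=$(dpkg-query -W -f='${Status}' "$1" 2>/dev/null || true)
    if status_is_installed "$status"; then
        echo "$1: installed"
    else
        echo "$1: NOT installed"
    fi
}

# Only run the check on systems that actually have dpkg.
if command -v dpkg-query >/dev/null 2>&1; then
    report_package xrdp
    report_package xorgxrdp
fi
```

On an affected system, we would expect xrdp to be reported as installed and xorgxrdp as not installed.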

Fixing xRDP on Ubuntu 18.04.2

If you are performing a brand new xRDP installation or if you have installed xRDP and you are encountering the issue, you will need to perform the following actions.

New xRDP installation Scenario

So, let’s go into more detail. Let’s assume you have performed a fresh installation of Ubuntu 18.04.2 and you want to install the xRDP package manually. You will need to perform the following actions.

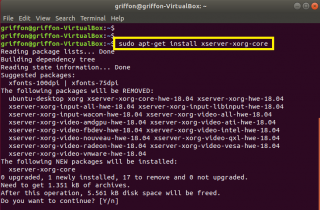

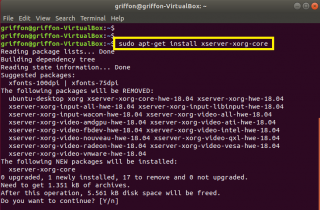

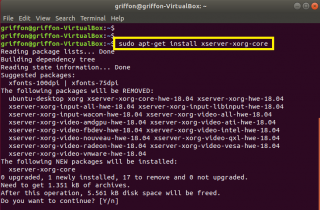

Step 1 – Install xserver-xorg-core by issuing the following command

sudo apt-get install xserver-xorg-core

Note: You will notice that installing this package will trigger the removal of the *xserver-xorg-hwe-18.04* packages, which might be used or needed by your system. So, you might lose keyboard and mouse input when connecting locally to the machine. To fix this issue, you will have to issue the following command just after this one:

sudo apt-get -y install xserver-xorg-input-all
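Before installing anything, you might want to see which packages would be removed. The following is a minimal sketch based on the simulation mode of apt-get (`-s`), which changes nothing on the system. It assumes the usual simulation output where each removal appears as a line starting with `Remv`, followed by the package name. The helper name `list_removals` is ours.

```shell
#!/bin/sh
# Read `apt-get -s` output on stdin and print the packages it would remove.
# In simulation mode apt-get marks each removal with a line starting "Remv".
list_removals() {
    awk '$1 == "Remv" { print $2 }'
}

# Simulation needs no root and changes nothing on the system.
if command -v apt-get >/dev/null 2>&1; then
    apt-get -s install xserver-xorg-core 2>/dev/null | list_removals
fi
```

If the list contains input-related packages, you know that you will have to install xserver-xorg-input-all afterwards.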

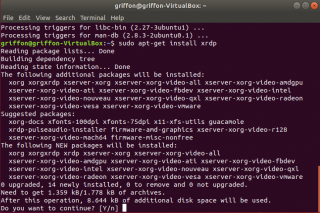

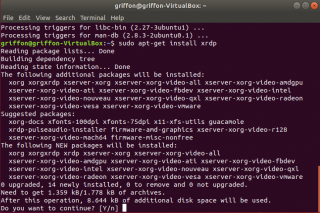

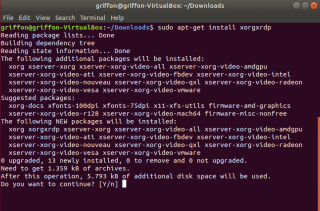

Step 2 – Install xRDP package

sudo apt-get install xrdp

In the screenshot, you can see that because there are no more dependency issues, the xorgxrdp package is listed to be installed along with the xRDP package.
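The fresh installation steps above can be put together in a small script. This is only a sketch and not the official installation script. The `DRY_RUN` variable and the function names are ours, and by default the script only prints the commands instead of running them.

```shell
#!/bin/sh
# Run the fresh-install sequence from the steps above, or just print it.
# DRY_RUN and run_step are illustrative names, not part of any xrdp tooling.
DRY_RUN="${DRY_RUN:-1}"

run_step() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "would run: $*"
    else
        "$@"
    fi
}

# Stop at the first command that fails, so xrdp is never installed
# on top of unresolved dependencies.
install_xrdp_fresh() {
    run_step sudo apt-get install xserver-xorg-core &&
    run_step sudo apt-get -y install xserver-xorg-input-all &&
    run_step sudo apt-get install xrdp
}

install_xrdp_fresh
```

Set `DRY_RUN=0` when you are ready to really execute the commands. The commands are the same as in the steps above, so apt-get will still ask for confirmation where the `-y` option is not used.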

Fixing xRDP installed package

If you have installed the xRDP packages on Ubuntu 18.04.2 using the Std-Xrdp-Install-0.5.1.sh script, you simply need to install the missing dependencies in order to restore the xrdp functionality. To do so, issue the following command:

sudo apt-get install xserver-xorg-core

Note: Again, you will notice that installing this package will trigger the removal of 17 *xserver-xorg\*-hwe-18.04* packages which might be used or needed by your system. So, you might lose keyboard and mouse input when connecting locally to the machine. To fix this issue, you will have to issue the following command just after this one:

sudo apt-get -y install xserver-xorg-input-all

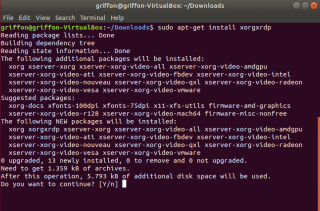

After installing the missing dependencies, you will need to manually install the xorgxrdp package in order to restore the xRDP functionality:

sudo apt-get install xorgxrdp

When this is done, you will be able to connect remotely to your Ubuntu 18.04.2 machine.

Fixing keyboard and mouse issues in Ubuntu 18.04.2

After installing the xRDP package using the recipe above, or if you have used the custom installation script (version 2.2), you might encounter another issue. When logging in locally on the Ubuntu machine, you will notice that you have lost keyboard and mouse interaction. Again, as explained above, the fix is quite simple: run the following command in the terminal session

sudo apt-get -y install xserver-xorg-input-all

Note: As long as you do not reboot after installing the xRDP package, you will not have any problems. After a reboot, you might lose keyboard and mouse input on your system.
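Since the problem only shows up after a reboot, it can be useful to check that nothing is missing before you restart the machine. The following is a minimal sketch that relies on `dpkg-query`. The package list is taken from this post, and the function names are ours.

```shell
#!/bin/sh
# Default check: ask dpkg whether a package is fully installed.
# This function can be overridden, for example when testing.
pkg_installed() {
    dpkg-query -W -f='${Status}' "$1" 2>/dev/null | grep -q 'ok installed'
}

# Print every package from the argument list that is not installed.
missing_packages() {
    for pkg in "$@"; do
        pkg_installed "$pkg" || echo "$pkg"
    done
}

missing=$(missing_packages xserver-xorg-core xserver-xorg-input-all xorgxrdp xrdp)
if [ -n "$missing" ]; then
    echo "Install before rebooting:"
    echo "$missing"
else
    echo "All required packages are present."
fi
```

If xserver-xorg-input-all shows up in the list, install it before rebooting to avoid losing keyboard and mouse input on the local console.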

Final Notes

The addition of the xserver-xorg-hwe-18.04 and associated packages seems to have introduced some dependency changes that interfere with the xorgxrdp and xrdp package versions available in the Ubuntu repository. So, if you have used the Std-Xrdp-Install-0.5.1.sh script and you are facing this issue, you will need to manually install the xorgxrdp package. If you have used the custom installation script (install-xrdp-2.2.sh), you will not have the issue, as the script compiles and installs the xorgxrdp package separately and uses the latest xrdp and xorgxrdp versions. However, you might also have the keyboard and mouse problem. Again, you will need to re-install the xserver-xorg-input-all package.

It seems that we will need to update the script in order to provide support for Ubuntu 18.04.2, as Ubuntu 18.04 is a Long Term Support release. Please be patient, as it might take us some time before we can upload the new version of the script.

Hope this clarifies the issue…

Till next time

See ya